(Overview of a project co-funded by Innovate UK as part of the Digital Health Technology Catalyst funding competition. The project was conducted by Body Aspect with support from academic partners. This blog provides a summary from Body Aspect's perspective. A more detailed academic report or publication is to follow later.)

We see virtual reality as a natural extension to our previous Research and Development. Having progressed from 2D body outlines to realistic 3D bodies, virtual reality (VR) takes it to the next dimension, by immersing the user in a virtual environment.

During this project, Body Aspect collaborated with local academics to explore the feasibility of developing practical tools for eating disorders and obesity that utilise virtual reality and 3D body scanning. The project team included experienced Human Computer Interaction experts from The University of Nottingham, and a clinical psychologist from Nottingham Trent University who has practical experience and expertise in the field of eating disorders.

User requirements work package - overview

We were aware of a growing body of research supporting the use of VR for eating disorders and wanted to explore this in more detail and move towards a specification of what a package of tools might look like. And we wanted to put users at the centre of our project, so we recruited four cohorts of 6-10 participants comprising professionals and people with lived experience from each of the two areas of eating disorders and obesity. Participants were involved in two phases of workshops and a 3D body scanning session.

The first phase introduced participants to virtual reality and involved discussions about the potential use of VR and 3D body scanning. The body scanning session then allowed participants to have a 3D body scan and to use our software to create a 3D image of their perceived body. The final phase offered a greater range of VR applications to try, an opportunity for participants to see their own body as an avatar, and a comparison of their perceived vs actual body.

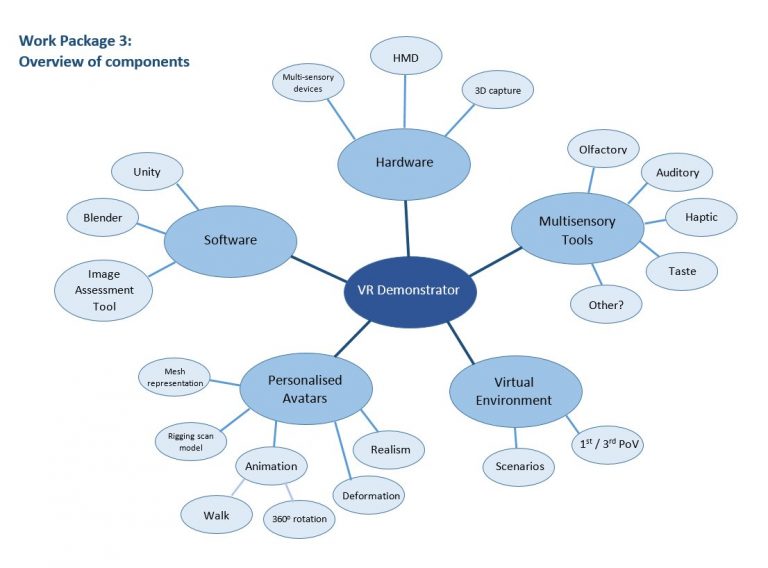

Technical investigation work package - overview

In parallel with the user requirements work package, technical aspects were investigated throughout the project and enabled us to create software demonstrators that could be used by participants during the workshops.

We developed the VR demonstrators using the Unity platform. We used two HTC Vive VR systems during our workshops, and a SizeStream 3D body scanner. In terms of the multi-sensory elements, we decided that the most interesting new aspect to explore would be the use of smell. We also planned to convert participants' body scans into avatars, so that the VR applications would offer not only the more common first-person perspective but also a third-person perspective, in which a participant sees their own body in a scene.

Session 1 - Workshop - Introduction to VR

The first workshop introduced participants to Body Aspect's 3D image assessment technology and demonstrated a multi-sensory virtual reality kitchen simulation (pizza smell included!).

So the simulation went like this.

People generally liked using virtual reality; there is no doubt that putting on a head-mounted display for the first time can be quite a profound experience. One or two people experienced dizziness or sickness (which can be partly down to the nature of VR itself and partly down to the performance of the system on the day, if the graphics are a bit jittery). The smell element did evoke emotional responses and is something that will enhance future VR systems, but it is not currently supported by VR systems. Some participants described the VR environment as a safe space: users experience the session as if they were in the real environment, which gives them the opportunity to explore immediate emotions and thought processes at their own pace.

Session 2 - Body scan & image assessment software

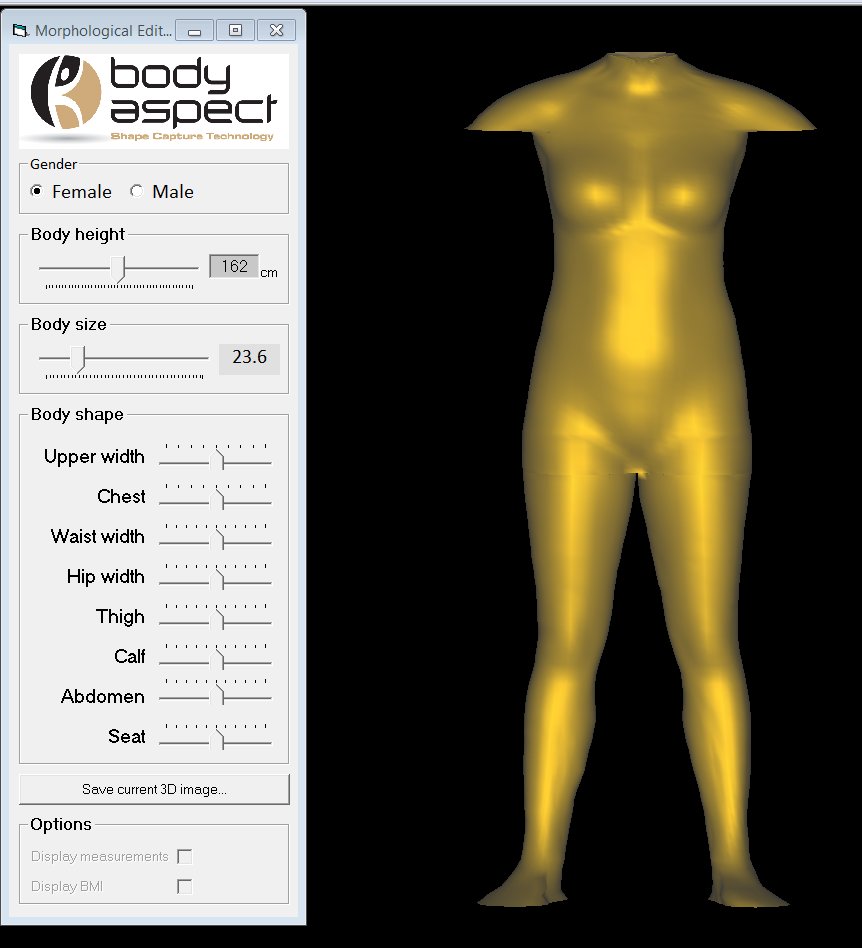

The body scan session used the SizeStream infra-red body scanner to capture a full body scan. Plus there was an opportunity to use Body Aspect's image assessment software to create a realistic perceived image.

The body scanner we use is shown in the above image. It is a curtained booth, and the subject stands still for about 7 seconds whilst 14 infra-red sensors capture points on the surface of the body. And the processed scan looks like the image below. We took two scans: one in underwear and one in close fitting clothes. The underwear scan provides more detail but not everyone is comfortable with this.

The lived experience cohort experienced a range of emotions in response to the body scan, but there was an acknowledgement that it offers the opportunity to explore challenging thoughts and feelings. The professional cohort also identified the potential value of the scanning exercise but noted that it might not be appropriate for everyone, and that care would be needed. This was consistent with a message heard throughout the project: different tools will be appropriate for different people at different stages.

The software used for creating a perceived image is shown below. The software uses a database of scans to create a realistic body for any combination of height and weight. The user can then manipulate individual shape features.
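For readers curious about how a database of scans could yield a body for any height and weight, here is a minimal sketch. It is not Body Aspect's implementation, which this blog does not describe. It assumes each scan is reduced to a few named measurements and uses inverse-distance weighting, a generic interpolation technique chosen purely for illustration. The `Scan` class, the `estimate_measurements` function and the measurement names are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Scan:
    """Hypothetical record for one scan in the database."""
    height_cm: float
    weight_kg: float
    measurements: dict[str, float]  # e.g. {"waist_cm": 80.0}


def estimate_measurements(scans, height_cm, weight_kg, power=2.0):
    """Estimate measurements for a height/weight pair by
    inverse-distance weighting over the scans in the database.

    Assumption: every scan carries the same measurement names.
    Note that height and weight are in different units; a real
    system would probably normalise them before computing distances.
    """
    if not scans:
        raise ValueError("the scan database is empty")

    weights = []
    for scan in scans:
        distance = math.hypot(scan.height_cm - height_cm,
                              scan.weight_kg - weight_kg)
        if distance == 0:
            # Exact match in the database: use that scan directly.
            return dict(scan.measurements)
        weights.append(1.0 / distance ** power)

    total = sum(weights)
    names = scans[0].measurements.keys()
    return {
        name: sum(w * s.measurements[name] for w, s in zip(weights, scans)) / total
        for name in names
    }
```

In this sketch, nearby scans dominate the estimate and distant scans contribute very little. The manual adjustment of individual shape features mentioned above would then be applied on top of the estimated starting body.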

Session 3 - Workshop - Intensive VR session

In the final phase of workshops we reviewed some of the previous sessions and provided new software exercises for participants to try. First, participants were able to view the images that had been produced at the previous session: their perceived image vs their actual scan.

The next exercise was a return to the kitchen scenario, except that in this case participants had the opportunity, if they agreed, to see their own body scan avatar in the scene. The idea was to explore their feelings towards the third person point of view, where the camera follows the subject.

The next exercise was a line-up of different sized avatars on a stage. The user could click on a particular avatar and it would move around. The idea here was to explore body awareness through a line-up of bodies of different sizes and shapes. During the discussions various adaptations of this idea were suggested: for example, that a user's own body scan could be included in the line-up; or that it could show the individual at different stages of progress.

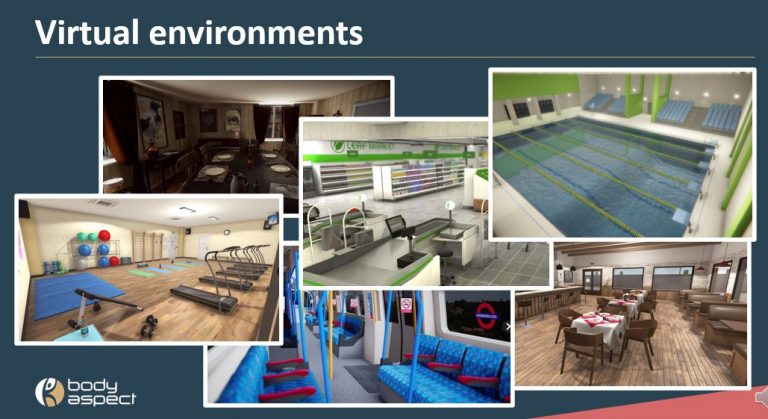

Finally, users were shown several possible virtual environments and we discussed which might be the most relevant scenarios to simulate.

There was consistent support for the use of virtual reality simulations. The types of scenarios most requested were supermarket shopping, dining at home or at a restaurant, and social situations. Participants felt that the software for creating realistic 3D perceived images could be useful for some people. It was also recognised that 3D body scanning adds power to both of the above: being able to see their body scan avatar in a virtual environment adds another dimension and allows a user to experience second- and third-person perspectives; and comparing a perceived image to an actual body scan is an obvious way of illustrating and quantifying body image disturbances.
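As an illustration of what quantifying the difference between a perceived image and an actual scan might look like, the sketch below expresses each perceived measurement as a percentage of the scanned one, so that 100 means an accurate estimate and values above 100 mean overestimation. This is an assumption made for illustration, not a description of Body Aspect's software; the function name `perception_ratio` and the measurement names are hypothetical.

```python
def perception_ratio(perceived, actual):
    """Return each perceived measurement as a percentage of the
    actual (scanned) measurement.

    Both arguments are dicts mapping a measurement name to a value
    in the same units. Measurements missing from `perceived`, or
    with a non-positive actual value, are skipped.
    """
    ratios = {}
    for name, actual_value in actual.items():
        if name in perceived and actual_value > 0:
            ratios[name] = 100.0 * perceived[name] / actual_value
    return ratios


# Hypothetical example values, not data from the project.
actual_scan = {"waist_cm": 80.0, "hips_cm": 100.0}
perceived_image = {"waist_cm": 100.0, "hips_cm": 100.0}
ratios = perception_ratio(perceived_image, actual_scan)
```

With these made-up numbers the waist is perceived as 25% larger than scanned, while the hips are estimated accurately. A per-measurement breakdown like this could show which parts of the body a person tends to misjudge.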

Towards BodyVR

There is a growing body of research that supports the use of virtual reality for eating disorders and obesity but there is a lack of software available to clinics and clinicians. This project has helped to clarify some user requirements and pointed towards a specification for a package of tools.

We want to develop technology that will help professionals with their current approaches, but also be incorporated into new interventions. The system that we are proposing comprises a combination of 3D Body Scanning + virtual reality equipment + software which we believe could assist in the following ways:

Please get in touch if you would like to know more. Meanwhile, a presentation about the project, delivered at the EDIC 2021 conference, is included here.